Data Augmentation

Data Augmentation

Introduction

Data augmentation is a technique used to artificially increase the size and diversity of training datasets by creating modified versions of existing data. It's particularly valuable when you have limited training data, want to improve model generalization, or need to address class imbalance. By generating new training examples through various transformations, data augmentation helps models learn more robust features and reduces overfitting.

Why Data Augmentation Matters

The Data Scarcity Problem

- Limited datasets: Real-world data collection is often expensive and time-consuming

- Overfitting: Small datasets can lead to models that memorize rather than generalize

- Class imbalance: Some classes may have significantly fewer examples than others

- Domain gaps: Training data may not cover all possible variations in real-world scenarios

Benefits of Data Augmentation

- Increased dataset size: More training examples without additional data collection

- Improved generalization: Models learn to handle variations in input data

- Reduced overfitting: Regularization effect through data diversity

- Better robustness: Models become less sensitive to small changes in input

- Class balance: Can help address imbalanced datasets

Types of Data Augmentation

Various data augmentation techniques for different data types

Various data augmentation techniques for different data types

1. Geometric Transformations

Rotation

Rotate data points by random angles around a center point.

When to use:

- 2D spatial data

- Image-like data representations

- When orientation invariance is desired

Parameters:

- Maximum rotation angle

- Rotation center (usually origin)

Pros:

- Simple to implement

- Preserves data structure

- Good for orientation invariance

Cons:

- Limited to 2D+ data

- May create unrealistic samples

- Can change semantic meaning

Scaling

Multiply data points by random scaling factors.

When to use:

- When size invariance is important

- Numerical data with meaningful magnitudes

- Time series with amplitude variations

Parameters:

- Scaling range (e.g., 0.8 to 1.2)

- Uniform vs per-dimension scaling

Pros:

- Preserves relative relationships

- Simple to implement

- Good for size invariance

Cons:

- May create unrealistic magnitudes

- Can affect feature distributions

- Requires careful range selection

Translation

Shift data points by random offsets.

When to use:

- Spatial data

- When position invariance is desired

- Time series with baseline shifts

Parameters:

- Translation range

- Per-dimension vs uniform translation

Pros:

- Preserves data shape

- Good for position invariance

- Maintains feature relationships

Cons:

- May move data outside valid ranges

- Can create boundary effects

- Requires domain knowledge for ranges

2. Noise-Based Augmentation

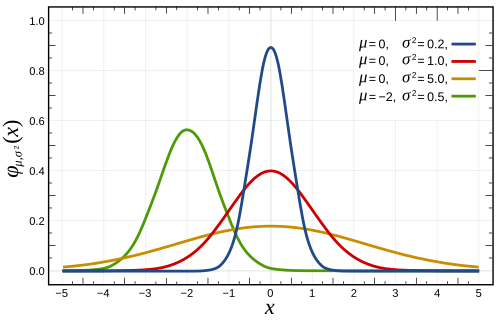

Gaussian Noise Addition

Adding Gaussian noise to create variations

Adding Gaussian noise to create variations

Add random Gaussian noise to data points.

Formula: x_aug = x + ε, where ε ~ N(0, σ²)

When to use:

- Continuous numerical data

- When small perturbations are realistic

- To improve robustness to measurement noise

Parameters:

- Noise level (standard deviation)

- Feature-specific vs uniform noise

Pros:

- Simple and fast

- Good regularization effect

- Preserves data distribution shape

- Works with any data type

Cons:

- May create unrealistic samples

- Can blur important features

- Requires careful noise level tuning

3. Synthetic Sample Generation

SMOTE (Synthetic Minority Oversampling Technique)

SMOTE generates synthetic samples between existing minority class samples

SMOTE generates synthetic samples between existing minority class samples

SMOTE creates synthetic samples by interpolating between existing minority class examples.

Algorithm:

- For each minority sample, find k nearest neighbors

- Select random neighbor

- Generate synthetic sample along the line connecting them

- Repeat until desired balance is achieved

Formula: x_synthetic = x + λ(x_neighbor - x), where λ ∈ [0,1]

When to use:

- Imbalanced classification problems

- When minority class samples are clustered

- Supervised learning tasks

Parameters:

- k (number of neighbors)

- Oversampling ratio

- Distance metric

Pros:

- Addresses class imbalance effectively

- Creates realistic synthetic samples

- Preserves class boundaries

- Well-established technique

Cons:

- Can create overlapping classes

- May generate noise in high dimensions

- Requires labeled data

- Computationally expensive for large datasets

Mixup

Mixup creates virtual training examples by mixing pairs of samples

Mixup creates virtual training examples by mixing pairs of samples

Mixup creates virtual training examples by linearly interpolating between pairs of samples and their labels.

Formula:

x_mix = λx_i + (1-λ)x_jy_mix = λy_i + (1-λ)y_j

Where λ ~ Beta(α, α)

When to use:

- Deep learning applications

- When smooth interpolation makes sense

- Classification with soft labels

Parameters:

- α (Beta distribution parameter)

- Mixing strategy (random pairs vs strategic)

Pros:

- Improves generalization

- Reduces overfitting

- Works well with neural networks

- Provides smooth decision boundaries

Cons:

- May create unrealistic samples

- Requires soft label support

- Can be computationally expensive

- May not work well for all data types

Domain-Specific Augmentation

Image Data

- Flipping: Horizontal/vertical flips

- Cropping: Random crops and resizing

- Color: Brightness, contrast, saturation adjustments

- Blur: Gaussian blur, motion blur

- Elastic deformation: Non-linear transformations

Text Data

- Synonym replacement: Replace words with synonyms

- Random insertion: Insert random words

- Random deletion: Remove random words

- Back translation: Translate to another language and back

Time Series

- Time warping: Stretch or compress time axis

- Magnitude warping: Scale amplitude

- Window slicing: Extract random subsequences

- Jittering: Add temporal noise

Audio Data

- Pitch shifting: Change fundamental frequency

- Time stretching: Change speed without pitch

- Noise addition: Add background noise

- Spectral masking: Mask frequency bands

Choosing the Right Augmentation Strategy

Decision framework for choosing augmentation methods

Decision framework for choosing augmentation methods

Decision Framework:

- What type of data do you have?

- Images → Geometric + photometric transformations

- Text → Linguistic transformations

- Numerical → Noise + geometric transformations

- Time series → Temporal transformations

- What invariances do you want?

- Translation → Use translation augmentation

- Rotation → Use rotation augmentation

- Scale → Use scaling augmentation

- Noise → Use noise addition

- Do you have class imbalance?

- Yes → Consider SMOTE or class-specific augmentation

- No → Use general augmentation techniques

- How much data do you have?

- Very little → Aggressive augmentation

- Moderate → Balanced augmentation

- Plenty → Light augmentation for regularization

- What's your computational budget?

- Limited → Simple transformations (noise, scaling)

- Moderate → Geometric transformations

- High → Advanced methods (SMOTE, Mixup)

Best Practices

Design Principles

- Domain knowledge: Ensure augmentations make sense for your domain

- Preserve semantics: Don't change the meaning of the data

- Realistic variations: Generate plausible samples

- Balance: Don't over-augment to the point of losing original patterns

Implementation Guidelines

- Validation strategy: Use proper cross-validation with augmented data

- Augmentation timing: Apply during training, not validation/testing

- Parameter tuning: Experiment with different augmentation parameters

- Monitoring: Track how augmentation affects model performance

Common Pitfalls

- Data leakage: Don't augment validation/test data

- Over-augmentation: Too much augmentation can hurt performance

- Unrealistic samples: Ensure augmented data is plausible

- Ignoring domain constraints: Respect physical/logical constraints

Evaluation and Validation

Metrics to Track

- Dataset size increase: How much data was generated

- Class balance improvement: Reduction in class imbalance

- Model performance: Accuracy, precision, recall on test set

- Generalization: Performance on unseen data

- Training stability: Convergence and variance across runs

Validation Strategies

- Hold-out validation: Keep original test set unchanged

- Cross-validation: Ensure augmentation doesn't leak across folds

- Ablation studies: Compare with and without augmentation

- Parameter sensitivity: Test different augmentation parameters

Advanced Techniques

Learned Augmentation

- AutoAugment: Automatically learn augmentation policies

- GANs: Use generative models to create new samples

- Variational autoencoders: Generate samples in latent space

Adaptive Augmentation

- Curriculum learning: Start with simple augmentations, increase complexity

- Dynamic augmentation: Adjust augmentation based on training progress

- Sample-specific: Different augmentations for different samples

Multi-modal Augmentation

- Cross-modal: Use one modality to augment another

- Synchronized: Maintain consistency across modalities

- Complementary: Different augmentations for different modalities

Real-World Applications

Computer Vision

- Medical imaging: Augment rare disease cases

- Autonomous driving: Generate diverse traffic scenarios

- Quality control: Create variations of defect patterns

Natural Language Processing

- Sentiment analysis: Generate paraphrases with same sentiment

- Machine translation: Create diverse translation examples

- Question answering: Generate question variations

Healthcare

- Drug discovery: Generate molecular variations

- Diagnostic imaging: Augment rare condition examples

- Electronic health records: Generate synthetic patient data

Finance

- Fraud detection: Generate synthetic fraud patterns

- Risk modeling: Create stress test scenarios

- Algorithmic trading: Generate market condition variations

Key Takeaways

- Data augmentation is powerful for improving model performance with limited data

- Choose augmentations carefully based on domain knowledge and desired invariances

- Balance is key - too little augmentation wastes potential, too much can hurt performance

- Validate properly to ensure augmentation actually helps your specific task

- Consider advanced methods like SMOTE for imbalanced data or Mixup for deep learning

- Monitor semantic preservation to ensure augmented samples remain meaningful

- Experiment systematically with different techniques and parameters

Interactive Exploration

Use the controls to:

- Switch between different augmentation methods

- Adjust augmentation parameters and intensity

- Observe how different techniques affect data distribution

- Compare original vs augmented dataset characteristics

- Explore the trade-offs between dataset size and sample quality